How LLMs Learn: The Science Behind the Models

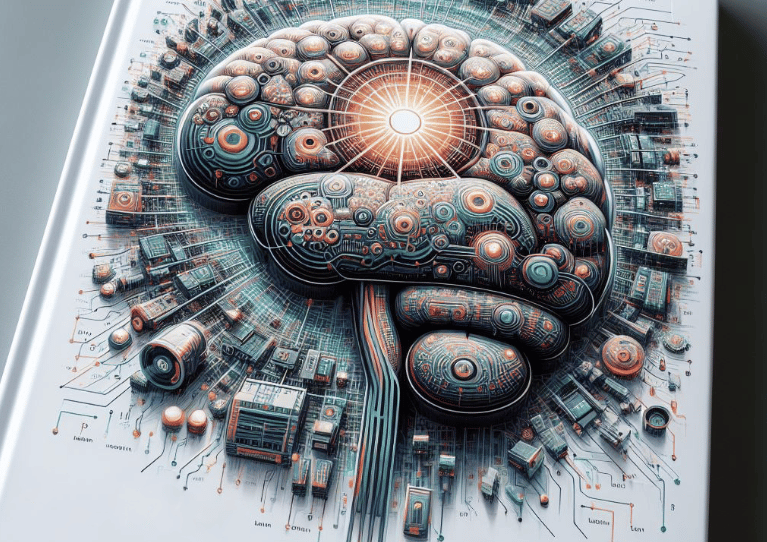

This article delves into the technical intricacies of Large Language Models (LLMs), exploring key concepts such as embedding, retrieval, and the role of machine learning and neural networks in their development. It highlights how these mechanisms enable LLMs to process and generate human-like text with remarkable accuracy, leveraging vast datasets to enhance AI's understanding and interaction with language.


A deep dive into the technical workings of LLMs. This includes an overview of machine learning, neural networks, training processes, and the role of big data in model development.

Embedding and Retrieval

Embedding and retrieval are pivotal concepts for understanding how large language models (LLMs), and the systems built around them, process and generate human-like text. At the heart of these mechanisms lies embedding, a technique that translates the vast and nuanced domain of human language into a mathematical space. Words, phrases, and even entire documents are represented as vectors, essentially points in a high-dimensional space. The power of embedding lies in its ability to capture semantic relationships: words with similar meanings are positioned closer together in this vector space, with closeness commonly measured by cosine similarity. This gives the model a numerical grip on context and meaning, which helps it handle synonyms, paraphrases and, to a degree, idioms and cultural references.
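To make "closer together" concrete, here is a minimal Python sketch. The three-dimensional vectors are hypothetical values picked by hand for illustration (real models learn their vectors during training and use hundreds or thousands of dimensions), and closeness is measured with cosine similarity, one common choice among several.

```python
import math

# Hypothetical 3-dimensional word embeddings, hand-picked for illustration.
# Real models learn their vectors from data and use far more dimensions.
EMBEDDINGS: dict[str, list[float]] = {
    "cat": [0.90, 0.80, 0.10],
    "dog": [0.85, 0.75, 0.20],
    "car": [0.10, 0.20, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word: str) -> str:
    """Return the other vocabulary word whose embedding is closest to `word`'s."""
    target = EMBEDDINGS[word]
    candidates = (w for w in EMBEDDINGS if w != word)
    return max(candidates, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


for other in ("dog", "car"):
    score = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[other])
    print(f"cat vs {other}: {score:.3f}")
print("closest to cat:", most_similar("cat"))
```

With these made-up vectors, "cat" scores far higher against "dog" than against "car", which is the geometric sense in which related words sit near each other.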

Retrieval, on the other hand, is the process of finding the stored information most relevant to a query. In practice it usually runs alongside the model rather than inside it: a collection of documents or passages is embedded ahead of time, the query is embedded the same way, and the system returns the items whose vectors lie closest to the query vector. Those items are often added to the model's prompt, an approach known as retrieval-augmented generation (RAG). Imagine an immense library where each book (knowledge piece) is shelved not by its title but by the essence of its content. When a question is posed, the system goes to the spot on the shelves that matches the query's meaning and picks up the nearest vectors (books). Because proximity in embedding space reflects meaning rather than exact wording, this is not merely keyword matching: a query can match a passage that shares few or none of its words. Together, embedding and retrieval let LLM-based systems ground their responses in relevant material, making answers more accurate and better informed and opening up new possibilities for human-AI interaction.
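The nearest-neighbour search at the heart of retrieval can be sketched in a few lines of Python. This is a brute-force sketch under stated assumptions: the document titles and all vectors, including the query's, are hypothetical stand-ins for what a trained embedding model would produce, and `retrieve` is an illustrative helper, not any particular library's API.

```python
import math

# Hypothetical document embeddings. A real system would compute these with a
# trained embedding model; here they are hand-picked 3-d vectors whose axes
# loosely stand for "animals", "vehicles" and "cooking".
DOCUMENTS: dict[str, list[float]] = {
    "How to groom a long-haired cat": [0.90, 0.05, 0.05],
    "Choosing food for a puppy": [0.70, 0.00, 0.40],
    "Changing a car's oil": [0.05, 0.95, 0.10],
    "A weeknight pasta recipe": [0.00, 0.05, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vector: list[float], k: int = 2) -> list[str]:
    """Return the k document titles whose embeddings are most similar to the query."""
    ranked = sorted(
        DOCUMENTS.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,  # highest similarity first
    )
    return [title for title, _ in ranked[:k]]


# Hypothetical embedding of the query "my kitten's fur is matted".
query = [0.85, 0.00, 0.10]
print(retrieve(query, k=2))
```

Assuming an embedding model placed the vectors this way, the top result shares no words with the query; it is selected purely because its vector points in a similar direction. Comparing the query against every document is fine for a handful of items, but large collections are normally searched with approximate nearest-neighbour indexes instead.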

Machine Learning Explained

Machine learning, a subset of artificial intelligence, stands as a transformative force in the modern technological landscape. At its core, machine learning is about giving computers the ability to learn and make decisions from data without being explicitly programmed for specific tasks. This paradigm shift from traditional programming to data-driven learning has opened up new frontiers across various fields, from healthcare and finance to autonomous vehicles and, of course, natural language processing.

The Fundamentals of Machine Learning

The essence of machine learning lies in its ability to recognize patterns in data and make predictions or decisions based on those patterns. It begins with training a model on a dataset. In supervised learning, the most common setting, each example in the dataset has two parts: the input data (features) and the expected output (labels). For instance, in a simple email-filtering application, the input could be the text of an email and the label would mark it as 'spam' or 'not spam'. The learning algorithm uses these examples to learn the relationship between inputs and outputs. Once trained, the model can make predictions on new, unseen data.
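The spam example can be made concrete with a small classifier. The sketch below uses multinomial naive Bayes, one classic technique for text classification and not necessarily what any production filter uses; the six training emails are made up, and `train` and `predict` are hypothetical helper names.

```python
import math
from collections import Counter

# A tiny, made-up labeled dataset: (email text, label).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("cheap prize offer click now", "spam"),
    ("meeting notes for tomorrow", "not spam"),
    ("can we move our lunch to friday", "not spam"),
    ("draft of the project report attached", "not spam"),
]


def tokenize(text):
    """Lowercase and split on whitespace (deliberately simple)."""
    return text.lower().split()


def train(examples):
    """Count labels and per-label word frequencies (multinomial naive Bayes)."""
    label_counts = Counter()
    word_counts = {}  # label -> Counter of words seen under that label
    vocabulary = set()
    for text, label in examples:
        label_counts[label] += 1
        words = tokenize(text)
        word_counts.setdefault(label, Counter()).update(words)
        vocabulary.update(words)
    return label_counts, word_counts, vocabulary


def predict(model, text):
    """Return the label with the highest log-probability for `text`."""
    label_counts, word_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total_examples)  # prior P(label)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one (Laplace) smoothing so an unseen word can't zero out a label.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING_DATA)
print(predict(model, "free prize money"))
print(predict(model, "notes from the project meeting"))
```

Training here is just counting how often each word appears under each label; prediction combines those counts into a score per label and picks the higher one, which is how the model generalizes to emails it has never seen.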

Types of Machine Learning

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning, the most common type, involves training a model on labeled data. The model learns to predict the output from the input data. Unsupervised learning, on the other hand, deals with unlabeled data. Here, the model tries to find patterns and relationships within the data itself. Reinforcement learning is a bit different; it involves training a model to make a sequence of decisions by rewarding it for good decisions and penalizing it for bad ones, much like training a pet with treats.
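For contrast with learning from labels, here is a minimal sketch of unsupervised learning: k-means clustering, one common unsupervised technique, applied to made-up, unlabeled 2-D points. No labels are supplied; the algorithm finds the two groups on its own by alternating between assigning points to their nearest centre and moving each centre to the mean of its points. The deterministic initialisation is a simplification for readability.

```python
import math

# Unlabeled, made-up 2-D points: nothing tells the algorithm which group is which.
POINTS = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.2), (5.3, 4.9), (4.8, 5.1)]


def kmeans(points, k, iterations=10):
    """Cluster points into k groups; returns (centroids, clusters)."""
    # Simple deterministic initialisation: k points spread through the list.
    # Library implementations typically use random or k-means++ initialisation.
    centroids = [points[i * len(points) // k] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                xs, ys = zip(*members)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return centroids, clusters


centroids, clusters = kmeans(POINTS, k=2)
for centre, members in zip(centroids, clusters):
    print(f"centre ({centre[0]:.2f}, {centre[1]:.2f}) <- {members}")
```

On this toy data the two centres settle within two iterations, one inside each group of three points.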

Impact on Language Processing

In the context of language processing, machine learning has been a game-changer. It has enabled the development of sophisticated models that can understand, interpret, and generate human language with remarkable accuracy. From simple tasks like spam detection to complex ones like sentiment analysis and language translation, machine learning algorithms have significantly enhanced the capabilities of language processing systems.

Machine learning, with its ability to process and learn from large datasets, has thus become a cornerstone of modern AI. Its applications in natural language processing are particularly striking, showcasing its power to not only process vast amounts of information but also to derive meaningful and contextually relevant insights from it.