How LLMs Learn: The Science Behind the Models

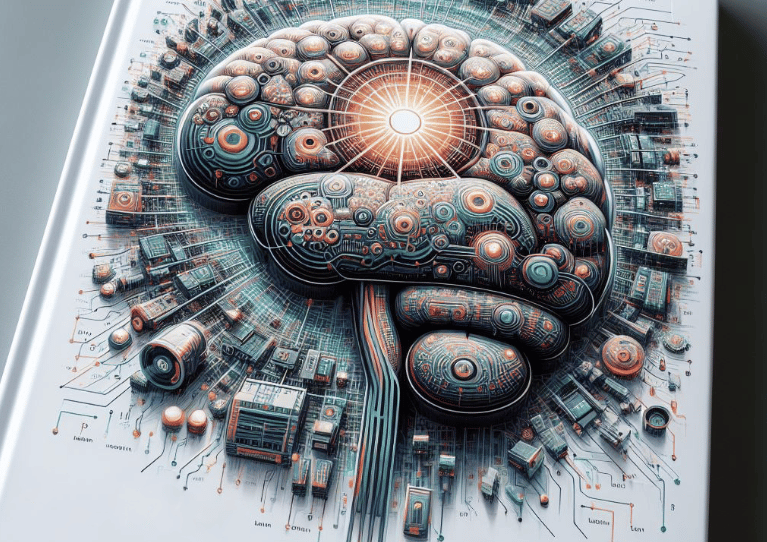

This article looks at neural networks, the technology underlying modern AI systems, particularly in natural language processing (NLP). It explains how these brain-inspired computational models, built from layers of interconnected neurons and trained on data, have transformed language processing tasks and paved the way for more capable and nuanced human-computer interaction.

Inside Neural Networks

Neural networks, a foundational concept in artificial intelligence, power many of today's most advanced machine learning systems, including those specializing in natural language processing (NLP). At their core, neural networks are computational models loosely inspired by the human brain, designed to recognize patterns in data and solve complex problems.

The Structure of Neural Networks

A neural network is composed of layers of interconnected nodes, or "neurons," loosely modeled on the neurons in the human brain. Each neuron computes a weighted sum of its inputs, adds a bias term, applies a nonlinear activation function, and passes the result on to the neurons in the next layer. A typical network has three kinds of layers: the input layer, which receives the initial data; one or more hidden layers, where the intermediate computation takes place; and the output layer, which produces the final result. A network's complexity and capability are largely shaped by its depth (the number of hidden layers) and its width (the number of neurons in each layer).
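
To make the layer structure concrete, here is a minimal forward-pass sketch in plain Python. The layer sizes, the random weight initialization, and the choice of ReLU for the hidden layers and sigmoid for the output are illustrative assumptions rather than details from this article. Real frameworks also vectorize these loops instead of iterating neuron by neuron.

```python
import math
import random

def relu(x: float) -> float:
    """Hidden-layer activation: passes positive values, zeroes negatives."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Output activation: squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all
    inputs, adds its bias, and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Random weights in [-1, 1] and zero biases (illustrative initialization)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

# Hypothetical sizes: 3 inputs, two hidden layers of 4 neurons, 1 output.
LAYER_SIZES = [3, 4, 4, 1]
rng = random.Random(0)
LAYERS = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:])]

def forward(x):
    """Pass the input through every layer in turn."""
    activations = x
    for i, (weights, biases) in enumerate(LAYERS):
        is_output_layer = i == len(LAYERS) - 1
        activation = sigmoid if is_output_layer else relu
        activations = dense(activations, weights, biases, activation)
    return activations

print(forward([0.5, -1.2, 3.0]))  # a one-element list holding a value between 0 and 1
```

Each call to `dense` corresponds to one layer. Adding more layers increases depth, and giving each layer more neurons increases width.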

How Neural Networks Learn

The power of neural networks lies in their ability to learn from data through a process called "training." During training, the network is fed large amounts of example data, and a loss function measures how far each output is from the expected result. The weights of the connections between neurons are then adjusted to reduce that loss. The key technique here is backpropagation. The error at the output is propagated backward through the network, layer by layer, to compute how much each weight contributed to it (the gradient of the loss with respect to that weight). An optimization method such as gradient descent then nudges each weight in the direction that reduces the error, so the network gradually improves over many iterations.
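
The loop below is a minimal sketch of training with backpropagation. It assumes a tiny network with 2 inputs, one hidden layer of 4 tanh neurons, and 1 sigmoid output. It also assumes binary cross-entropy as the loss, plain per-example gradient descent, and the XOR problem as toy data. These choices and the hyperparameters (`hidden`, `lr`, `epochs`) are illustrative and not taken from any particular model. The backpropagation step computes `d_out` and `d_hidden`, and the weight updates that follow are the gradient-descent step.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset (XOR): the target is 1 when exactly one input is 1.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def train(hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train a 2-hidden-1 network; return the mean loss recorded each epoch."""
    rng = random.Random(seed)
    # W1[j][i]: weight from input i to hidden neuron j; W2[j]: hidden j to output.
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in DATA:
            # Forward pass: hidden layer (tanh), then the output neuron (sigmoid).
            h = [math.tanh(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(hidden)]
            y_hat = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
            # Binary cross-entropy between prediction and expected output
            # (clamped so log() never receives exactly 0).
            p = min(max(y_hat, 1e-12), 1.0 - 1e-12)
            total_loss += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
            # Backward pass. For sigmoid + cross-entropy, the gradient of the loss
            # with respect to the output neuron's pre-activation is y_hat - y.
            d_out = y_hat - y
            # Propagate the error back to each hidden neuron (tanh'(a) = 1 - tanh(a)^2),
            # using W2 *before* it is updated below.
            d_hidden = [d_out * W2[j] * (1.0 - h[j] ** 2) for j in range(hidden)]
            # Gradient-descent step: move each weight against its gradient.
            for j in range(hidden):
                W2[j] -= lr * d_out * h[j]
                for i in range(2):
                    W1[j][i] -= lr * d_hidden[j] * x[i]
                b1[j] -= lr * d_hidden[j]
            b2 -= lr * d_out
        history.append(total_loss / len(DATA))
    return history

losses = train()
print(f"mean loss, first epoch: {losses[0]:.3f}, last epoch: {losses[-1]:.3f}")
```

Frameworks such as PyTorch and JAX compute these gradients automatically through automatic differentiation. The underlying arithmetic is the same chain-rule bookkeeping written out by hand here.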

Neural Networks in Language Processing

In NLP, neural networks have transformed how machines understand and generate human language. Trained on large text datasets, they learn the nuances and patterns of language. Recurrent Neural Networks (RNNs) and their more advanced variant, Long Short-Term Memory (LSTM) networks, process data one element at a time while carrying a hidden state forward. This made them well suited to sequential tasks like speech recognition and language translation. More recently, the transformer architecture has advanced NLP further. Its self-attention mechanism lets every position in a sequence attend directly to every other position, so a whole sequence can be processed in parallel rather than step by step. Transformers are the architecture behind today's large language models (LLMs).
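
The contrast between recurrence and attention can be sketched in a few lines of plain Python. This is a simplified illustration, not a faithful implementation of either architecture. Here `rnn` is a vanilla recurrent cell with no LSTM gating. The `self_attention` function is scaled dot-product attention, but it omits the learned query, key, and value projections, the multiple heads, and the masking that real transformers use. The toy embeddings and weights are made up.

```python
import math

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn(inputs, W_x, W_h):
    """Vanilla RNN: one step per token; the hidden state carries context forward."""
    h = [0.0] * len(W_h)
    states = []
    for x in inputs:  # inherently sequential: step t needs h from step t-1
        h = [math.tanh(a + b) for a, b in zip(matvec(W_x, x), matvec(W_h, h))]
        states.append(h)
    return states

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(inputs):
    """Scaled dot-product self-attention with queries = keys = values = inputs
    (no learned projections, for brevity). No position's output depends on
    another position's output, so all positions could be computed in parallel."""
    d = len(inputs[0])
    outputs = []
    for q in inputs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in inputs]
        weights = softmax(scores)  # how strongly this position attends to each other one
        outputs.append([sum(w * v[i] for w, v in zip(weights, inputs)) for i in range(d)])
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token embeddings
W_x = [[0.5, -0.3], [0.2, 0.8]]                # toy input-to-hidden weights
W_h = [[0.1, 0.0], [0.0, 0.1]]                 # toy hidden-to-hidden weights
print(rnn(tokens, W_x, W_h)[-1])  # final hidden state summarizing the sequence
print(self_attention(tokens))     # one context-mixed vector per token
```

The key difference is the data dependency. Each RNN step needs the previous hidden state, while each attention output depends only on the inputs. That independence is what lets transformers process a sequence in parallel on modern hardware.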

Their ability to learn and adapt makes neural networks a cornerstone of AI systems. As these networks grow more sophisticated, so does their ability to handle the complexities of human language, opening up new applications in NLP and beyond. Their continued evolution marks an exciting stage in the quest to build machines that can think, learn, and understand in ways that mimic the human brain.