The Evolution of Language Models

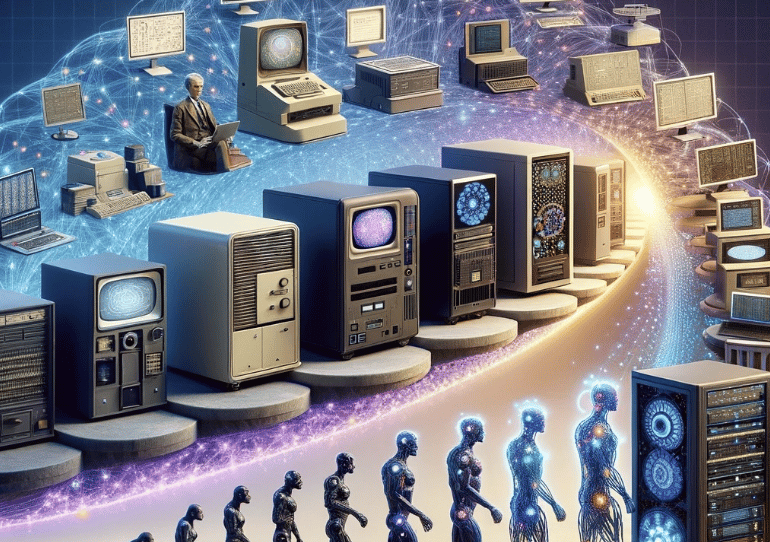

This article traces the evolution of language models from simple rule-based systems to advanced AI-driven neural networks, highlighting key milestones in Natural Language Processing (NLP). It explores how technological advancements have transformed language processing, setting the stage for the emergence of Large Language Models (LLMs) and marking significant progress in understanding and interacting with human language.


This chapter traces the historical development of language models from simple rule-based systems to advanced neural networks. It explores key milestones in AI and NLP (Natural Language Processing), setting the stage for the emergence of LLMs.

From Rule-Based to AI-Driven Systems

The journey from rule-based to AI-driven language processing is a story of technological evolution that has fundamentally transformed how machines understand and interact with human language. In the early stages of computational linguistics, rule-based systems were the cornerstone. These systems operated on predefined rules and grammatical structures: programmers painstakingly wrote rules that dictated how words were to be processed and interpreted. While effective for specific, limited tasks, these systems could not cope with the complexity and variation inherent in natural language. Any phrasing the rules did not anticipate went unhandled, which made them impractical for understanding language in its full richness and contextual depth.
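To make the rigidity of this approach concrete, here is a minimal sketch of a rule-based classifier. The rules, labels, and the `classify` function are hypothetical and chosen only for illustration; they are not taken from any historical system.

```python
import re

# Hypothetical hand-written rules: each pairs a regex pattern with a label.
# A real rule-based system would contain far more rules than this.
RULES = [
    (re.compile(r"^(hello|hi)\b", re.IGNORECASE), "greeting"),
    (re.compile(r"\bwhat time\b", re.IGNORECASE), "ask_time"),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE), "farewell"),
]


def classify(utterance: str) -> str:
    """Return the label of the first matching rule, or 'unknown'."""
    for pattern, label in RULES:
        if pattern.search(utterance):
            return label
    return "unknown"


print(classify("Hello there"))        # matched by the greeting rule
print(classify("What time is it?"))   # matched by the ask_time rule
print(classify("Got the time?"))      # same intent, but no rule covers it
```

The last call shows the limitation described above: a paraphrase that no rule anticipates falls through to `unknown`, and the only remedy is to write yet another rule by hand.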

The advent of machine learning heralded a new era in language processing. Instead of relying on a rigid set of rules, machine learning algorithms allowed systems to learn and adapt from actual language usage. This shift was significant; it meant that systems could now process and understand language based on patterns and examples rather than strict rule-following. The introduction of neural networks, particularly deep learning models, further accelerated this shift. These AI-driven systems could analyze vast amounts of text data, learning not just the rules of language, but also its nuances, idioms, and colloquialisms. They became adept at understanding context, a critical aspect of human language that had eluded rule-based systems.

This transition from rule-based to AI-driven systems marked a leap from the mechanical to the intuitive in language processing. AI-driven models, especially recent Large Language Models (LLMs), demonstrate an unprecedented level of fluency across language tasks, from translation to content creation. However, this evolution also brought new challenges, including the need for vast amounts of training data and concerns about biases in that data. As the field continues to advance, the lessons learned from this transition help in addressing these challenges, so that the technology becomes not only more sophisticated but also better aligned with the nuanced and dynamic nature of human language.

Key Milestones in NLP: Charting the Evolution

The field of Natural Language Processing (NLP) has experienced a series of groundbreaking milestones that have significantly shaped its current landscape. Understanding these key developments provides a window into how NLP has evolved from a fledgling area of study into a cornerstone of modern artificial intelligence.

Early Beginnings and Rule-Based Systems

The story of NLP begins in the mid-20th century, with the earliest experiments in machine translation during the 1950s. These initial forays, such as the Georgetown-IBM experiment of 1954, which automatically translated a small set of Russian sentences into English, marked the first attempts to use computers for translating languages. The next major advance came with the development of rule-based systems in the 1960s and 1970s. These systems, although limited in their capacity to handle the complexity of human language, laid the foundations of computational linguistics. They relied on sets of hand-coded rules to interpret sentence structure and grammar, setting the stage for more sophisticated future developments.

The Shift to Statistical Methods

A significant turning point came in the late 1980s and 1990s with the introduction of statistical methods in NLP. This shift marked a move away from rule-based systems, allowing for more flexibility and adaptability in language processing. Statistical models, which learned language patterns from large corpora of text rather than from hand-written rules, brought about improvements in machine translation, speech recognition, and text processing. This era saw the rise of key technologies such as IBM’s statistical machine translation models and the widespread use of Hidden Markov Models, which became instrumental in speech recognition systems.
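As a rough illustration of what "learning language patterns from a corpus" means, the sketch below estimates bigram (word-pair) probabilities by counting. The three-sentence corpus, the `<s>`/`</s>` boundary markers, and the function names are assumptions made for this example; it is not IBM's translation model or a Hidden Markov Model, only the simplest member of the same statistical family.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> are hypothetical sentence-boundary markers.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_prob(counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 if prev is unseen."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    return following[nxt] / total if total else 0.0


# Toy corpus, invented for illustration only.
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigrams(corpus)

print(next_word_prob(model, "the", "cat"))  # "cat" follows "the" in 2 of 3 cases
print(next_word_prob(model, "cat", "sat"))  # "sat" follows "cat" in 1 of 2 cases
```

No rule about cats or dogs is written anywhere: the probabilities come entirely from the data, so adding more text changes the model's behavior without any change to the code.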